40 machine learning noisy labels

Data fusing and joint training for learning with noisy labels Abstract. It is well known that deep learning depends on a large amount of clean data. Because of the high cost of annotation, various methods have been devoted to annotating data automatically. However, this generates a large number of noisy labels in the datasets, which is a challenging problem. In this paper, we propose a new method for ...

Noisy Labels in Remote Sensing Deep learning (DL) based methods have recently seen a rise in popularity in the context of remote sensing (RS) image classification. Most DL models require huge amounts of annotated images during training to optimize all parameters and reach high performance during evaluation.

Normalized Loss Functions for Deep Learning with Noisy Labels Robust loss functions are essential for training accurate deep neural networks (DNNs) in the presence of noisy (incorrect) labels. It has been shown that the commonly used Cross Entropy (CE) loss is not robust to noisy labels. Whilst new loss functions have been designed, they are only partially robust.
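
As a rough illustration of what "normalizing" a loss can mean, the sketch below divides the cross entropy for the given label by the sum of the cross entropies over all possible labels. That normalization rule is an assumption here, since the excerpt above does not spell it out, and the helper names (`softmax`, `cross_entropy`, `normalized_cross_entropy`) are hypothetical.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Standard CE for a single example: -log p(label)."""
    return -math.log(probs[label])


def normalized_cross_entropy(probs, label):
    """CE for `label` divided by the summed CE over every class.

    Assumed normalization: the result is bounded between 0 and 1,
    whereas plain CE is unbounded.
    """
    total = sum(-math.log(p) for p in probs)
    return cross_entropy(probs, label) / total


probs = softmax([2.0, 0.5, -1.0])
for k in range(len(probs)):
    print(k, cross_entropy(probs, k), normalized_cross_entropy(probs, k))
```

With this normalization, the loss values for one example sum to 1 across all candidate labels, so a single mislabeled example can contribute only a bounded amount to the total loss.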

machine learning - Classification with noisy labels? - Cross Validated Works with sklearn/pyTorch/Tensorflow/FastText/etc.

    # cleanlab 1.x API; in cleanlab 2.x this class is named CleanLearning
    from cleanlab.classification import LearningWithNoisyLabels
    from sklearn.linear_model import LogisticRegression

    lnl = LearningWithNoisyLabels(clf=LogisticRegression())
    lnl.fit(X=X_train_data, s=train_noisy_labels)
    # Estimate the predictions you would have gotten by training with *no* label errors.
    predicted_test_labels = lnl.predict(X_test)

To find label errors in your dataset.

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise). Second, we propose a simple but highly effective method to overcome both synthetic and real-world noisy labels.

PDF Learning with Noisy Labels - NeurIPS Noisy labels are denoted by $\tilde{y}$. Let $f: \mathcal{X} \to \mathbb{R}$ be some real-valued decision function. The risk of $f$ w.r.t. the 0-1 loss is given by $R_D(f) = \mathbb{E}_{(X,Y) \sim D}\left[\mathbf{1}\{\operatorname{sign}(f(X)) \neq Y\}\right]$. The optimal decision function (called Bayes optimal) that minimizes $R_D$ over all real-valued decision functions is given by $f^\star(x) = \operatorname{sign}(\eta(x) - 1/2)$, where $\eta(x) = P(Y = 1 \mid x)$.
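
To make the Bayes-optimal rule from the NeurIPS excerpt concrete, here is a minimal sketch on a toy discrete distribution. The table of η(x) values, the uniform marginal over x, and the function names are all made up for illustration; labels are taken to be in {-1, +1}, and ties at η(x) = 1/2 are sent to +1 as an arbitrary choice.

```python
def bayes_optimal(eta):
    """Build f*(x) = sign(eta(x) - 1/2), with ties mapped to +1."""
    def f_star(x):
        return 1 if eta(x) >= 0.5 else -1
    return f_star


def zero_one_risk(f, eta, marginal):
    """Exact 0-1 risk under a discrete distribution.

    marginal maps each x to P(X = x); eta(x) is P(Y = +1 | x).
    The error at x is P(Y = -1 | x) if f predicts +1, else P(Y = +1 | x).
    """
    risk = 0.0
    for x, p_x in marginal.items():
        p_pos = eta(x)
        p_error = (1.0 - p_pos) if f(x) > 0 else p_pos
        risk += p_x * p_error
    return risk


# Hypothetical toy problem: three inputs, uniform marginal.
ETA_TABLE = {0: 0.9, 1: 0.2, 2: 0.6}
MARGINAL = {0: 1 / 3, 1: 1 / 3, 2: 1 / 3}


def eta(x):
    return ETA_TABLE[x]


def always_positive(x):
    return 1


f_star = bayes_optimal(eta)
print("Bayes risk:", zero_one_risk(f_star, eta, MARGINAL))
print("Always +1 :", zero_one_risk(always_positive, eta, MARGINAL))
```

No decision function can achieve a lower 0-1 risk on this toy distribution than `f_star`, which is what "Bayes optimal" means in the excerpt.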

SELC: Self-Ensemble Label Correction Improves Learning with Noisy ... To overcome this problem, we present a simple and effective method, self-ensemble label correction (SELC), to progressively correct noisy labels and refine the model. We look deeper into the memorization behavior in training with noisy labels and observe that the network outputs are reliable in the early stage. To retain this reliable knowledge ...

[P] Noisy Labels and Label Smoothing : MachineLearning - reddit My best guess is that this 'label smoothing' thing isn't going to change the optimal classification boundary at all (in a maximum-likelihood sense) if the "smoothing" is symmetrical wrt. the labels, and even the non-symmetric case can be addressed in a rather more straightforward way, simply by adjusting the weight of more "uncertain" points in the dataset.

Active label cleaning for improved dataset quality under ... - Nature Imperfections in data annotation, known as label noise, are detrimental to the training of machine learning models and have a confounding effect on the assessment of model performance....

Tag Page - L7 To our surprise, label errors are pervasive across 10 popular benchmark test sets used in most machine learning research, destabilizing benchmarks. We often deal with label errors in datasets, but no common framework exists to support machine learning research and benchmarking with label noise.

Announcing cleanlab: a Python package for finding ...

Deep learning with noisy labels: Exploring techniques and remedies in ... Most of the methods that have been proposed to handle noisy labels in classical machine learning fall into one of the following three categories (Frénay and Verleysen, 2013): 1. Methods that focus on model selection or design. Fundamentally, these methods aim at selecting or devising models that are more robust to label noise.

How to handle noisy labels for robust learning from uncertainty We calculate the sampling rates $R_v$ and $R_t$, which control how much small-loss data should be selected during each training step:

$$R_v = \nu \times |V|, \qquad R_t = R(e) \times |t| - \gamma, \qquad R(e) = 1 - \min\left\{\frac{e}{E_k}\tau,\ \tau\right\} \tag{5}$$

Here, the constant $\nu$ is a parameter used to select the learning mode according to the ratio of clean-likely labels.

Learning Soft Labels via Meta Learning - Apple Machine Learning Research When applied to datasets containing noisy labels, the learned labels correct the annotation mistakes and improve over the state of the art by a significant margin. Finally, we show that learned labels capture semantic relationships between classes, and thereby improve teacher models for the downstream task of distillation.

PDF Selective-Supervised Contrastive Learning With Noisy Labels tags [36,55]. These labels are cheap but inevitably noisy. Noisy labels impair the generalization performance of deep networks [15,60]. This is because, when supervised by datasets with noisy labels, the mislabeled data provide incorrect signals when inducing latent representations for the instances. The corrupted representations then cause inac-
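
The small-loss selection rate R(e) quoted in the "How to handle noisy labels" excerpt above can be sketched as follows. This covers only R(e); the other quantities in that excerpt (ν, |V|, |t|, γ) are not modeled. Reading the garbled fraction as e/E_k is an assumption, and the function and parameter names (`keep_rate`, `warmup_epochs`, `select_small_loss`) are hypothetical.

```python
def keep_rate(epoch, warmup_epochs, tau):
    """R(e) = 1 - min((e / E_k) * tau, tau).

    Starts at 1.0 (keep everything) and decays linearly to 1 - tau
    over the first `warmup_epochs` epochs, then stays flat.
    """
    return 1.0 - min(epoch / warmup_epochs * tau, tau)


def select_small_loss(losses, rate):
    """Return indices of the `rate` fraction of examples with the smallest loss."""
    n_keep = int(rate * len(losses))
    order = sorted(range(len(losses)), key=lambda i: losses[i])
    return order[:n_keep]


# Hypothetical settings: assumed noise level tau = 0.2, ramp over 10 epochs.
for epoch in (0, 5, 10, 20):
    print(epoch, keep_rate(epoch, warmup_epochs=10, tau=0.2))

batch_losses = [0.9, 0.1, 0.5, 0.3]
print(select_small_loss(batch_losses, rate=0.5))
```

The idea behind such a schedule is that examples with small loss are more likely to be correctly labeled, so the fraction of each batch that is kept shrinks as training proceeds.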

How to Improve Deep Learning Model Robustness by Adding Noise

    # import noise layer
    from keras.layers import GaussianNoise
    # define noise layer
    layer = GaussianNoise(0.1)

The output of the layer will have the same shape as the input, with the only modification being the addition of noise to the values.

Event-Driven Architecture Can Clean Up Your Noisy Machine Learning Labels Machine learning requires a data input to make decisions. When talking about supervised machine learning, one of the most important elements of that data is its labels. In Riskified's case, the ...

How Noisy Labels Impact Machine Learning Models | iMerit Can ML systems trained with noisy labels operate effectively? Studies have shown that under certain conditions, ML systems trained with mislabeled data can function well. For example, a 2018 MIT/Cornell University study tested the accuracy of ML image classification systems trained with various levels of label noise. They found that the ML systems could maintain good performance with high levels of label noise under the following conditions:

How Noisy Labels Impact Machine Learning Models - KDnuggets While this study demonstrates that ML systems have a basic ability to handle mislabeling, many practical applications of ML are faced with complications that make label noise more of a problem. These complications include: Not being able to create very large training sets, and Systematic labeling errors that confuse machine learning.

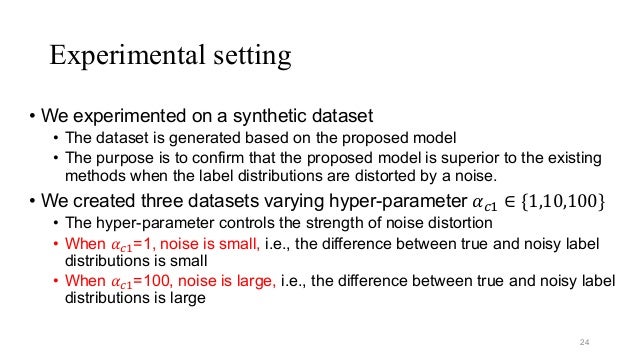

Data Noise and Label Noise in Machine Learning Asymmetric Label Noise, All Labels: A randomly chosen α% of all labels i are switched to label i + 1, or to 0 for the maximum i (see Figure 3). This follows the real-world scenario that labels are randomly corrupted, as the order of labels in datasets is also random [6].

[Figure 3: asymmetric label noise]

Asymmetric Label Noise, Single Label
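
The "all labels" variant of asymmetric label noise described above can be sketched in a few lines. This is an illustrative implementation under stated assumptions, not the article's own code: `alpha` is given as a fraction rather than a percentage, labels are integers 0..num_classes-1, and the function name and `seed` parameter are hypothetical.

```python
import random


def add_asymmetric_noise(labels, alpha, num_classes, seed=0):
    """Flip a randomly chosen fraction `alpha` of labels from i to i + 1.

    The largest label wraps around to 0, which is what the modulo does.
    Returns a new list; the input is left untouched.
    """
    rng = random.Random(seed)
    n_flip = round(alpha * len(labels))
    flip_indices = rng.sample(range(len(labels)), n_flip)
    noisy = list(labels)
    for idx in flip_indices:
        noisy[idx] = (labels[idx] + 1) % num_classes
    return noisy


clean = [0, 1, 2] * 10
noisy = add_asymmetric_noise(clean, alpha=0.2, num_classes=3)
changed = [i for i, (c, n) in enumerate(zip(clean, noisy)) if c != n]
print(len(changed), "of", len(clean), "labels were flipped")
```

Because every flipped label moves to one specific neighbouring class, the corruption is systematic per class, unlike symmetric noise where a flipped label may land on any other class.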

Dealing with noisy training labels in text ... - Stack Overflow Works with sklearn/pyTorch/Tensorflow/FastText/etc.

    # cleanlab 1.x API; in cleanlab 2.x this class is named CleanLearning
    from cleanlab.classification import LearningWithNoisyLabels
    from sklearn.linear_model import LogisticRegression

    lnl = LearningWithNoisyLabels(clf=LogisticRegression())
    lnl.fit(X=X_train_data, s=train_noisy_labels)
    # Estimate the predictions you would have gotten by training with *no* label errors.
    predicted_test_labels = lnl.predict(X_test)

Meta-learning from noisy labels :: Päpper's Machine Learning Blog ... Label noise introduction: Training machine learning models requires a lot of data. Often, it is quite costly to obtain sufficient data for your problem. Sometimes, you might even need domain experts, who don't have much time and are expensive. One option that you can look into is getting cheaper, lower-quality data, i.e. having less experienced people annotate the data. This usually has the ...

Learning from Noisy Labels with Deep Neural Networks: A Survey As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning applications. In this survey, we first describe the problem of learning with label noise from a supervised learning perspective.

Do Machine Learning Without Code. Fun Websites To Experiment With Machine… | by randerson112358 ...

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2021-IJCAI - Towards Understanding Deep Learning from Noisy Labels with Small-Loss Criterion. 2022-WSDM - Towards Robust Graph Neural Networks for Noisy Graphs with Sparse Labels. 2022-Arxiv - Multi-class Label Noise Learning via Loss Decomposition and Centroid Estimation.

Learning Graph Neural Networks with Noisy Labels - DeepAI Learning with noisy labels. In Advances in neural information processing systems, pp. 1196-1204, 2013. Patrini et al. (2016) Giorgio Patrini, Frank Nielsen, Richard Nock, and Marcello Carioni. Loss factorization, weakly supervised learning and label noise robustness. In International conference on machine learning, pp. 708-717, 2016.

An Introduction to Classification Using Mislabeled Data The performance of any classifier, or for that matter any machine learning task, depends crucially on the quality of the available data. Data quality in turn depends on several factors, for example the accuracy of measurements (i.e. noise), the presence of important information, the absence of redundant information, how well the collected samples represent the population, etc.

Impact of Noisy Labels in Learning Techniques: A Survey The method of elimination of noisy labels in deep learning approach is further classified into a robust loss function and modeling latent variable. ... D., & Laird, P. (1988). Learning from noisy examples. Machine Learning, 2(4), 343-370. Google Scholar Azadi, S., Feng, J., Jegelka, S., & Darrell, T. (2015). Auxiliary image regularization for ...

Deep learning with noisy labels: Exploring techniques and remedies in ... There is a growing interest in obtaining such datasets for medical image analysis applications. However, the impact of label noise has not received sufficient attention. Recent studies have shown that label noise can significantly impact the performance of deep learning models in many machine learning and computer vision applications.

PDF Learning with Noisy Labels - Carnegie Mellon University Noisy labels are denoted by $\tilde{y}$. Let $f: \mathcal{X} \to \mathbb{R}$ be some real-valued decision function. The risk of $f$ w.r.t. the 0-1 loss is given by $R_D(f) = \mathbb{E}_{(X,Y) \sim D}\left[\mathbf{1}\{\operatorname{sign}(f(X)) \neq Y\}\right]$. The optimal decision function (called Bayes optimal) that minimizes $R_D$ over all real-valued decision functions is given by $f^\star(x) = \operatorname{sign}(\eta(x) - 1/2)$, where $\eta(x) = P(Y = 1 \mid x)$.
